Atheist Peter Klevius' comfort and advice to Atheist Yoshua Bengio and others worried about AI: Use negative Human Rights (UDHR 1948) as the basic algorithm globally!

Read how Peter Klevius solved "the biggest mystery in science".

Read Peter Klevius in-depth research on The Psychosocial Freud Timeline.

Read Peter Klevius Origin of the Vikings from 2005 - now again available after Google deleted it 2014 and again in February 2024.

Peter Klevius (1981, 1992): The ultimate question ought to be: What is it like to be a stone? There's no difference between human consciousness polished through living, and the "consciousness" of a stone that has been smoothly shaped in streaming water against other rocks, stones etc. It started its "life" as a rugged piece of rock in a mountain and adapted to its life in streaming water down hill, or perhaps as a piece of rock falling on a beach and polished by waves.

Peter Klevius: Synaptic weights produce association patterns, not linguistic categories - like language itself which, according to Peter Klevius' now empirically proven theory on consciousness from 1992-94, is no different from other adaptations between thalamus and the "inner" and "outer" worlds which are constantly updated to be one. Berkeley was right, except that there's no place for an overall "perceiver", because Peter Klevius' analysis of existencecentrism (1992:21-22) excludes every possibility of reaching "outside" it.

Also see how US stole the world-dollar 1971 - and how China's rise challenges US stolen dollar hegemony.

The US demonized China has nothing to do with the real China, but all to do with US dollar embezzlement that makes it possible for US to spend despite constant trade deficit. US is the world's biggest counterfeiter - and a dangerous loser!

To understand US fear of China (the "China threat"), you need to understand 1) the background, i.e. US enormous 1971 dollar theft and its escalating consequences now, 2) the fact that China is already superior in every area* of tech and science, as well as meritocratic real democracy. Moreover, China has no reason to start wars - while US whole existence (the stolen dollar hegemony) depends on warmongering (militarization), and starting and continuing wars. And to understand how low the US led West has sunk, just consider BBC implying Russia deliberately targeting a children's hospital (why would Russia ask for more negative news?!) while not mentioning with a word Israel's slaughtering in Gaza and West bank the same day - not to mention the more than 40,000 Palestinians already murdered - most of them children and innocent adults!

* Both US and China itself try to downplay China's success - for different reasons.

Watch how US stole the dollar. When Nixon in 1971 admitted the US dollar theft (while lying that it was temporary) and said 'your dollar may not give you as much abroad as before', that statement actually defined the amount of US embezzlement, because when the US dollar was no longer pegged to gold - only pegged to the whims of US Federal Reserve - it meant that the world dollar (outside US) had to pay for US deficit. So the Bretton Woods (1944) all-world currency dollar, which was pegged to gold under the custodianship of the Fed, became split in two after the 1971 theft (i.e. US violation of the gold connection): a US dollar covering US deficit, and a world dollar that pays for it - both under the custodianship of US. What the US Fed is doing is controlling both currencies while favoring the US dollar.

US is the real enemy - and modern meritocratic high tech China is the real friend for any country that chooses peace and prosperity instead of militarism, war and misery!

The problem is US (whose theocratic Supreme Court has abandoned Human Rights) and religious fundamentalism. And the solution is China (which has not abandoned Human Rights but has instead been applauded by OIC's investigation on how well China treats muslims)!

Peter Klevius thinks like an animal - without confusing words - which makes most 10+ kids more naturally fluent* than him. However, by not thinking with words one eliminates many language traps. This has made it easier for Peter Klevius than for most others to think about thinking in a less language-biased way. Peter Klevius was actually extremely surprised when he first learned that other people so often think in words.

* Although, because of his well functioning brain, Peter Klevius can "pretend" to be naturally verbally fluent, it takes much more effort than when a kid or a slippery tongued politician does it. Peter Klevius can't take any personal credit for possessing a high IQ brain and a perfect dopamine and serotonin balance, because he was born that way. However, although jealous intelligencephobes love to fertilize unsubstantiated myths about high intelligence, Peter Klevius doesn't tick a single box - no one has ever caught him immoral, criminal, hysteric, narcissistic, racist, sexist etc. Peter Klevius has met and been with many people during his life, but has no fear to say this publicly. Yes, Peter Klevius has, because of circumstances, poor luck paired with no kin backup, and possible overconfidence as a "kicked out" high IQ youngster, had some rough times - but never ever let it hamper his (Atheist) Human Rights morals. On the contrary, in retrospect it seems that he has been almost too kind to some people. And at no point has Peter Klevius complained about others' "privilege" - but only tried (usually in vain) to explain his own situation so as to correct some misrepresentations. And yes, Peter Klevius has never been over ambitious but rather more keen on relaxing and enjoying life.

Peter Klevius' Atheistic advice: If you, theist, think you can think beyond your existencecentrism, then you confuse the "god" you say you believe in with yourself.

Atheist and Agnostic Peter Klevius can't possibly believe in a "god beyond human reason", and could, but doesn't, believe in ghosts either. However, others may believe in ghosts but cannot, like Peter Klevius, possibly believe in a "god or ghost beyond human reason". A "belief" always inescapably resides inside your existencecentrism, which makes it impossible to "believe" in something "defined" as being outside it.

One cannot believe in something that is defined as not being able to be defined.

Believing in ghosts is within human reason because it's supposed to reside within the realm of human reason (existencecentrism). The properties of a ghost are all within human reason. And stating that ghosts have unknown properties is also within human reason. However, stating that ghosts have believable properties outside human reason is an oxymoron because all beliefs are only possible within human reason (existencecentrism).

When I in 1992 published a book that proved the impossibility of a "god" residing "outside" the human mind, I also solved the "puzzle" about consciousness and how the brain works*.

* The elimination of language - see my stone example in Demand for Resources (Resursbegär 1992:31-39) - and the introduction of the thalamus as the "sounding board" (consciousness) for the lived experience imprinted in the brain.

The introduction of the linguistic concept of a "god beyond human reason" is utter nonsense! How could there possibly be anything "beyond human reason"**?! That's why intelligent and ontologically honest Jews are all Atheists!

** Aliens, yes, but nothing beyond human reason. And although the content in human existencecentrism continuously changes, "god" is never allowed to enter, says orthodox Jews, muslims - and Peter Klevius.

To believe, i.e. to think that something is true, correct, or real, is incompatible with something 'beyond human reason'. One can only believe in something within human reason, i.e. within one's existencecentrism, which could include whatever fantasies - but not something beyond human reason, i.e. outside one's existencecentrism. So if you say you believe in a "god beyond human reason", what that actually means is that you believe in your own thoughts - not in a "god beyond human reason".

Crucial for understanding consciousness is:

1. existencecentrism, i.e. that we are tied to a moving point in the world, and that our outlook is always limited from that point and with that particular flow of information - because the alternative would be a nonsensical "god" that is said to be "beyond human reason" while somehow still existing in "beliefs" inside existencecentrism.

Funnily, BBC sponsored populist scientist Brian Cox on Sinophobic PC media Bloomberg argues that if humankind is alone in our galaxy and if we get extinct we destroy human meaning and therefore we have a responsibility not to do so. This is logically impossible to digest. When Brian Cox told Sinophobic BBC about how the UK government forced Chinese in some harbours at gunpoint to trade tea for opium, and that it was the most disgraceful war in UK's history, then that's easy to understand and agree on. However, it seems that he hasn't realized the inevitable logic of existencecentrism when he said that: 'It worries me that if in our galaxy there's only humankind that thinks and can feel and in a real sense can bring meaning to the universe, if we destroy ourselves, then it's possible that we eliminate meaning, perhaps forever, in a galaxy of 400 billion stars. We have a tremendous responsibility not to do that. I would be much more comfortable with our current predicament if the galaxy was filled with civilizations because then someone else would stand in for us, but I'm not sure there is one.'

Peter Klevius: 1) 'Thinks and feels' is ambiguous. If a single newborn human somehow survives, then there will be no language, no humankind history and no human civilization - but still someone thinking and feeling. Moreover, this individual would in this respect be impossible to logically distinguish from other surviving animals, and aliens. Furthermore, if there were many surviving human babies then they could reproduce and develop a civilization that has nothing in common with Brian Cox's own civilization.

2) 'If we destroy ourselves then it's possible that we eliminate meaning, perhaps forever, in a galaxy.' Here the word 'perhaps' clearly indicates that Cox means a separate set of an other non-human civilization.

3) Taken together these statements constitute an illogical line of thoughts because there is nothing connecting meaning between an extinct humankind civilization and some alternative civilization that only existed as a thought in a human civilization. Moreover, even a human civilization that has been totally disconnected from an other human civilization, lacks any possibility of transferring 'meaning' from Cox's civilization.

Existencecentrism is the unique and finite possibilities of the individual being in a particular place (origo) in space and time and with a unique collection of adaptations engraved in the brain and communicated via the thalamus.

Existencecentrism is therefore a state of being that doesn't occur similar in other beings.

The world is consciousness limited by existencecentrism.

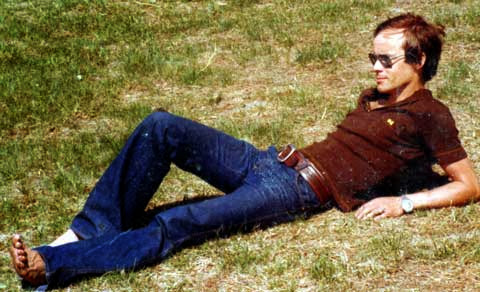

A modern male Homo sapiens*. This particular individual belongs to the bastard race that was the result when mongoloids mixed with archaic Homos.

Peter Klevius contemplating human evolution, consciousness and sex segregation. His father was a Goth from Gothenburg (possibly Sweden's best chess player of his time considering he won the Gothenburg championship many times over more than four decades) and his mother was from Finland and possessed 1/3 mongoloid features (she was extremely intelligent - just like her two brothers who both had studied double exams in engineering and economy and were leaders in Finland's biggest companies). Klevius himself may in this context be seen as a generational step downwards, i.e. in line with an overall progression towards a more diluted absolute intelligence. Klevius half sister (same mother but different father) followed the same trend and scored only 167 on an IBM IQ talent test (which she won). Photo taken some years after Peter Klevius in 1979 wrote the original Demand for Resources and created the 'Woman' drawing.

Although Peter Klevius had the most unprivileged upbringing (kidnapped at age two to an other country and secretly kept in a foster home and then kicked out at age 17 to his country of birth but penniless and with no family ties) and early adulthood, he also got the most privileged body when it comes to muscle power, motor skills and serotonin/dopamine balance, as well as super fast mental reaction time - which I only realized when visiting Munchen tech museum in the 1980s and tested a reaction time meter there and was shocked when the whole hall was filled with a deafening "dinosaur" roar from the loudspeakers - late in the month I had set the monthly record. Still at pensioner age my reaction time hasn't declined, as hasn't heart recovery rate (between 62-68), blood pressure (fluctuating around 70/110 5 min after exercise). Only resting heart beat has dropped from 38 at age 18 (I didn't do any sports because of lack of time) to between 45 and 55. Triglycerides I started measuring around age 40 and it has stayed the same (around 1 mmol/L) but my cholesterols have always been high. I have never been hospitalized for a disease or using medical or other drugs - nor has any woman caught me with erectile problems despite me having lived with women almost my entire life. I've consumed loads of sugar and fat - but I've kept my weight and still fool around with balls recreationally. At age 45 I got viral haemorrhagic fever and was badly down for more than a week but got no permanent issues. I even called the hospital but they said they couldn't do anything against a viral infection. So why am I telling this. Well, apart from questioning the stereotyping classification by age, firstly to comfort those who, like myself don't fit many health recommendations re. fat and sugar (where can one get a fizzy drink today with sugar instead of sweeteners?!), and secondly as an example of not to judge people who might not have been genetically equally lucky. 
However, I've also suffered from a rare genetic sensitivity for vision problem (less than 1 in 4000) where both parents need to carry the gene, and, in my case due to my unprivileged background, I inflicted it on myself through poor nutrition at a young age and lack of money. Had I known back then about it I would have stopped smoking earlier, and stopped living on cornflakes, coffee, cookies and beer for almost two years while working full time in the weeks plus educating myself in a profession (non-academic because I wasn't admissible for university because I'd been working in my teens instead of studying) in the evenings and filling weekends with extra jobs. At age 18 in the military I plus 26 others were chosen out of some 4000 because of extra good night vision. What an irony! Only later in life I was diagnosed (but no cure available) and started paying more attention about nutrition to slow down or stop the progression until stem cell therapy is available.

Peter's first child

Peter working as a forwarding agent at Volvo BM instead of university

Perpetua (203 AD): 'I saw a ladder of tremendous height made of bronze, reaching all the way to the heavens, but it was so narrow that only one person could climb up at a time. To the sides of the ladder were attached all sorts of metal weapons: there were swords, spears, hooks, daggers, and spikes; so that if anyone tried to climb up carelessly or without paying attention, he would be mangled and his flesh would adhere to the weapons.' Perpetua realized she would have to do battle not merely with wild beasts, but with the Devil himself. Perpetua writes: 'They stripped me, and I became a man'.

Peter Klevius: They stripped Perpetua of her femininity and she became a human!

The whole LGBTQ+ carousel is completely insane when considering that the 1948 Universal Declaration of Human Rights (UDHR) art. 2 gives everyone, no matter of sex, the right to live as they want without having to "change their sex". So the only reason for the madness is the stupidly stubborn cultural sex segregation which, like religious dictatorship, stipulates what behavior and appearance are "right" for a biological sex. And in the West, it is very much about licking islam, which refuses to conform to the basic (negative) rights in the UDHR, and instead created its own sharia declaration (CDHRI) in 1990 ("reformed" 2020 with blurring wording - but with the same basic Human Rights violating sharia issues still remaining). The UDHR allows women to voluntarily live according to sharia but sharia does not allow muslim women to live freely according to the UDHR. And culturally ending sex segregation does not mean that biological sex needs to be "changed." Learn more under 'Peter Klevius sex tutorials' which should be compulsory sex education for everyone - incl. people with ambiguous biological sex! The LGBTQ+ movement is a desperate effort to uphold outdated sex segregation. And while some old-fashioned trans people use it for this purpose, many youngsters (especially girls) follow it because they feel trapped in limiting sex segregation.

Excerpt from Demand for Resources (original title Resursbegär, by Peter Klevius 1992:21-22, ISBN 9173288411).

Chapt. Existencecentrism

The civilized human retraces her/his steps, lights a light and allows her/himself to be enlightened - only the suffering in the past and the shadow over the future are greater.

The word exist, from the Latin existere (to emerge, to appear) has, like the word existence, nowadays as the main meaning existence, i.e. something that has arisen/been created and now exists in the world of our senses.

To exist, i.e. existence, constitutes our vantage point when we consider the surrounding reality in time and space. We are existence-centered. Existence prevents godlike all-seeing but also easily leads to self-glorifying considerations. The word anthropocentrism covers some, but not all, of the meaning of the concept of existencecentrism.

Existence stands in contrast or as a complement to the modern Protestant concept of God. Existence and God, or as I prefer to express it, human and the unconscious (the unreached) together form 'everything' - God/The Unreached is thus not seen in things but in the existence of things via the awareness of existencecentrism.

That the human thought is locked to its subject, i.e. that someone thinks the thought, is connected to our linear cumulative conception of history. The whole story/thought creation turns into a giant inverted pyramid where stone is added to stone while the tip of the pyramid proportionally gets narrower at the same time as it points downward/backward and we ourselves stand on the top/latest and widest part.

The engine of the cumulative conception of history, i.e. what determines the value of past and present-day social phenomena, exists in the present.

The perception of history as linearly cumulative has as a consequence the need for creation. Development requires a beginning. The creation stories can be divided into two main groups: Creation from something or from nothing. In more "primitive" cultural contexts, it is common to imagine some form of primeval being that is brought to life during creation, while within the religiously influenced cultural circle, creation out of nothing with the help of a deity (the "first mover") is advocated. This can sometimes take surprising expressions such as e.g. in the s.c. "Big Bang" theory.

The driving forces behind science and religion are close to each other and the idea of an eternal universe where creation only exists in the human mind is difficult to accept (P. Klevius 1992:22).

Peter Klevius additional comments 2023

Existencecentrism, together with the stone example in the same 1992 book, laid the ground work for EMAH, which 1994 added the new findings re. cortico-thalamic two-way connections reported in Nature 1993. Although Peter Klevius had always been convinced it all happened in thalamus, he out of intellectual cowardice didn't dare to write it down in the 1992 book - which, btw was strongly supported 1991 by G. H. von Wright, Wittgenstein's successor at Cambridge.

Peter Klevius wrote:

Because Peter Klevius - whose EMAH solved* "consciousness, the biggest mystery ever" 1990-94* - can't get the Nobel prize due to "anonymity" and "islamophobia" (i.e. defense of Human Rights) it should be given to the craniopagus twins Krista and Tatiana Hogan who proved him right!

Also read how human evolution was made possible by ice age oscillations in the Pleistocene.

Dear reader, do realize how strongly Google is actively suppressing Peter Klevius' blogs - wonder why? Is it his defense for Human Rights, or his defense of girls/women, or is it his scientific revelations?! Take a check: Although Peter Klevius' blogs are scattered with popular images, Google has a hard time finding them (except a few Youtube). But if you scroll down far below Google's 'The rest of the results might not be what you're looking for. See more anyway', you'll find plenty of them! But few of the really important scientific ones.

* The core of which is the 'stone example' (see below) published in Demand for Resources 1992 but written 1990 and presented for G. H. von Wright (Wittgenstein's successor at Cambridge) 1991, and letter about EMAH (the Even More Astonishing Hypothesis) to Francis Crick at Salk 1994, after having been rejected from a main philosophical magazine due to it being 'too technical', and from a main neurological magazine due to it being 'too philosophical'. Peter Klevius' writing about EMAH was described by the Finnish neuroscientist, professor J. Juurmaa as: 'Peter Kleviuksen ajatuksen kulku on ilmavan lennokas ja samalla iskevän ytimekäs', which translated to English would mean something like: 'Peter Klevius' flow of thought is airily wide-ranging and at the same time strikingly succinct'. This he wrote in a long letter answering Peter Klevius' question about EMAH and the effects on the visual cortex on individuals who have been blind from birth. This inquiry was part of Peter Klevius' check up of his already published EMAH theory, so as to get a qualified confirmation that the "visual cortex" in born blind people is fully employed with other tasks than vision. Juurmaa's description of Peter Klevius is in line with philosopher Georg Henrik von Wright's 1980 assessment, and perhaps more importantly beneficial when assessing AI/deep learning etc. Dear reader, this "bragging" and self-naming is only for you, i.e. to understand that you may have some reason to take this text more seriously than "the usual influencer", and to rather connect it to a name than to an 'I'. After all, Peter Klevius is almost invisible in the topics he has some expertise on. Why isn't he at least cited as often as ordinary scientists (see answer below)?

Krista and Tatiana Hogan constitute the perfect follow up to Peter Klevius' stone example from 1990-92 (see below), because when they 'talk inside their head with each other' that can only happen in their connected thalamuses, not in their disconnected cortices.

In all other aspects they are separate individuals and personalities - except of course for that part of the cranium that keeps them together, and the entangled blood vessels and nerves that hindered separation. Krista's and Tatiana's brains have a unique thalamic bridge connection which proves Peter Klevius' 1994 theory EMAH (the Even More Astonishing Hypothesis - which alludes to Francis Crick's book The Astonishing Hypothesis) according to which "consciousness" resides in the thalamus - not in the cortex, although what plays out in the thalamic "display" triggers association patterns in the cortex which are reflected in new thalamic patterns. According to Peter Klevius, people with split brain halves appear as having two separate "minds" simply because each half only connects to the thalamus and not via the corpus callosum directly to the other half of the cortex, resulting in two separate association patterns in each half which then mix with the other half in the thalamus which exactly explains e.g. that these people may verbalise with one side but not the other although the other side also understands it but without verbalising it. However, while Tatiana and Krista Hogan share only a communication bridge between their thalamuses the result is exactly the same, i.e. that they "understand" each other, but from two different patterns of associations, just like people with split brain sharing the same thalamus. As they can "talk" with each other "inside their head", this means the "talking" happens only in their thalamuses, because if they should have access to the other's cortex they would feel talking to themselves, i.e. they would be one person with one personality.

Peter Klevius' EMAH (the Even More Astonishing Hypothesis) 1994. The dotted lines schematically describe the cortico-thalamic connections.

The unconnected white dots symbolise potential (nearest) connections to for the time being existing association pattern(s).

Neuronal connections and spikes in the cortex are of no interest when studying consciousness, because it resides in the thalamus. And although the thalamus doesn't represent your life history like the cortex does, it is the only display you have to your "inner world" and the only camera to your "outer world". The cortex is always the latest state of knowledge or configuration on which known and new data reflect from the thalamus. Although the thalamus "knows nothing" (much like your computer display) without it you wouldn't have access to your knowledge. Cortico-thalamic communication (e.g. thinking) is a continuous streaming where association patterns in the cortex reflect in thalamus which then reflects them back in a slightly altered way - i.e. based on but not exactly as the previous pattern, which again stimulates the next reflection from the cortex. This internal communication may then be added by external perceptions (incl. from the body).

We humans are chordates in which the thalamus evolved. We are also a special type of primates called Homo (e.g. Homo floresiensis) and our brain evolution accelerated at the beat of recent (<4 Ma) climate changes which repeatedly affected sea level. See https://peterklevius.blogspot.com/2023/01/how-pliocene-pleistocene-panama-isthmus.html

Why Peter Klevius?!

Partly because of his particular life that has freed him from usual scientific bias within an academic career. And partly because he has been lucky (or unlucky) to have had extremely intelligent parents: his father was, among other things, one of Sweden's best chess players ever (won the Gothenburg chess championship many times over more than four decades despite playing more for fun and for the entertainment of the spectators than for winning), and Peter Klevius' half sister (same mother) won IBM's talent contest with IQ 167. Add to this Peter Klevius' lifelong spending of time on free research on evolution and what it means to be a human. And because of the anonymity obscurity "problem" - partly imposed by reactionary attitudes - Peter Klevius' works aren't known widely enough, although Wittgenstein's successor at Cambridge, von Wright, already 1980 gave him high written credit for original philosophical/scientific analysis on evolution and methodologies, which also led to the first paid article on a new approach to science and evolution, published 1981. The other part is that Peter Klevius' bias-free analysis always gives anomalous results vs existing paradigms (also compare Peter Klevius' analysis which places our evolution in SE Asia, and the analysis of sex segregation which reveals that only heterosexual attraction can work as an analytical tool for analyzing relations between the sexes and Human Rights). Moreover, according to Peter Klevius, only a full commitment to the negative (basic) Universal Human Rights (Art. 2, 1948) can make all of us fully part of a "human community" - unlike "monotheistic" religions which always cut out the chosen ones from the "infidels", more or less, in one way or another.

Peter Klevius feels almost embarrassed because the "hard problem of consciousness" turned out to be self evident when using the EMAH model which hones away biased concepts that muddle the view. However, due to previous lack of interest in thalamus there are still today only limited data available although the interest in thalamus has increased recently (thanks to Peter Klevius' bombardment on the web since 2003 with his 30 year old EMAH analysis?).

Neurological background

Apart from the speed* problem EMAH also explains why there's almost negligible difference in the brain's need of energy no matter how hard we think.

* What has also been "puzzling" for brain research (and therefore rarely properly mentioned, or just talked away) is that reaction time seems to exceed the brain's own speed limit. However, this is self-evident in EMAH because awareness is already in the thalamus, and only those processes which need additional contact with the cortex are slightly delayed in comparison.

The importance of accounting for the thalamus when theorising about cortical contributions to human cognition.

High-order thalamic nuclei, such as the mediodorsal thalamus, are the core of cognition. However, due to the old 'just a simple relay station' attitude against thalamus, paired with a strong defence for the indefensible anthropocentric mentalist fantasies about linguistic concepts such as 'soul', 'self' etc., little effort has been made to really understand the function of the most obvious candidate as an interactive display mediating between incoming signals from the senses (incl. body signals) as well as from the cortex. The thalamus is ideally positioned in the midst of the head between the brainstem and the cortex.

The phase of both ongoing mediodorsal thalamic and prefrontal low-frequency activity is predictive of perceptual performance. Mediodorsal thalamic activity mediates prefrontal contributions to perceptual performance. These findings support Peter Klevius' EMAH model (1992, and reported to Francis Crick 1994 - although not sure if he read it despite confirmation letter from Salk Institute) that thalamocortical interactions predict perceptual performance displayed in thalamus as a continuous and seamless flow of new "now" awareness, much like a frameless video.

Your brain doesn't write memories - it deletes them by constantly updating/adapting your brain. The default mode is when the brain is in equilibrium with incoming signals, i.e. no new information to delete. Your brain adapts to whatever you experience.

"Consciousness" is your thalamus' adaptation to what your bodily sensations mean in relation to what is going on around you in the world as well as in the cortex. Learning and memory, language and culture are linguistic add-ons to create the mix of "conscious" feeling, which is of course material, because what else could it be.

Mentalism

Mentalism is the lack of understanding that even language is physical. Although ghosts or gods don't exist, the word 'ghost' and 'god', like the word 'stone', are physical realities. Without neurons no words, thought or uttered. And although mentalists (like everybody else) have no clue about any difference between concepts like "sensory inputs" and mental "reasoning", they anyway use such a divide. Reasoning is equally verbal and physical as talking loudly. Same with non-verbal reactions. A cat's reasoning before jumping on a mouse is the same as when it asks for going out. It's a linguistic "abstract" fantasy trap by mentalists to divide memory in abstract ("immaterial") concepts and material sensations or images.

If I utter or write 'ghost' then it becomes operational when adapted/understood by someone. What mentalists think is mental, is simply words that, for no particular reason, are lumped in a language category labelled "mental".

Although EMAH

focuses on the thalamus, i.e. vertebrates, the same applies to the

mushroom body in invertebrates which is also able to instantly combine

information from the internal body as well as from the environment -

even the nerve ring of starfish fulfils this task. According to Peter

Klevius (1992), brain evolution not only started as a rudimentary

olfactory organ, but is in fact still to be seen as the main brain

notwithstanding its name and that it's limited to a tiny part of the

human brain in conventional neurological descriptions. A long forgotten

smell from one's childhood, if felt as an adult ignites the whole brain

in an overwhelming flood of associations. And the reason why olfactory

connects differently than other perceptions is simply because it was

first in line in evolution of the vertebrate brain. So even though we

have lost much of our smell capacity, there's no need to limit the

olfactory to smell. The nose is a smell organ while the olfactory organ

is so much more.

According to EMAH, the thalamus is the action centre

while cortex is the mostly fixed "storage" against which the world is

surveyed/synchronized. Cortex hence is the updatable "film" on which its

subset thalamus projects incoming signal patterns from the "outer"

environment incl. the body as well as responses from the cortex itself -

new information from the thalamus as well as what we call "thinking",

which simply means the exchange of signals initiated by the thalamus,

i.e. reciprocal cortico-cortical interactions.

The main structure from the starfish to the human brain is similarly logical, i.e. an organism's command centre is always optimally located.

While

a starfish lacks a centralized brain, it has a nerve ring around the

mouth and a radial nerve running along the ambulacral region of each arm

parallel to the radial canal. The peripheral nerve system consists of

two nerve nets: a sensory system in the epidermis and a motor system in

the lining of the coelomic cavity. Neurons passing through the dermis

connect the two. The ring nerves and radial nerves have sensory and

motor components and coordinate the starfish's balance and directional

systems. The sensory component receives input from the sensory organs

while the motor nerves control the tube feet and musculature. The

starfish does not have the capacity to plan its actions. If one arm

detects an attractive odour, it becomes dominant and temporarily

over-rides the other arms to initiate movement towards the prey. The

mechanism for this is not fully understood.

Inhibitory

interneurons, rather than relay neurons make up most of the nuclei of

the thalamus. These neurons do not project into the cortex but instead

project into the other nuclei, modulating their activity. This is how

thalamus distributes signals in accordance with incoming signals and

reflections from the cortex. Mainly the pulvinar part of the dorsal

thalamus is focused on when it comes to reasoning etc. Although the

pulvinar is usually grouped as one of the lateral thalamic nuclei in

rodents and carnivores, it stands as an independent complex in primates.

Each pulvinar nucleus has its own set of cortical connections, which

participate in reciprocal cortico-cortical interactions. Unilateral

lesions of the pulvinar result in a contralateral neglect syndrome

resembling that resulting from lesions of the posterior parietal cortex.

This again emphasizes the "dictatorship" of the thalamus.

The real "mystery of consciousness" is why the self-evident answer has been stubbornly avoided despite being presented in countless writings, talks and on the web - even including a letter to Francis Crick in 1994.

The

reason is of course segregation used as a social and political power

tool. However, the greatness of Tatiana and Krista is precisely that

they have shown the world that total de-segregation works without loss

of individual personality. Whereas the majority of two separate twins

quarrelling is simply due to misunderstanding, Tatiana and Krista avoid

this because they can always see the rationality of whatever happens to

be at stake in their head. The thought process happens in their

connected thalamuses, not in their cortex which only reflects their

personality. In other words, what Tatiana's cortex delivers to the

thalamic display is different from what Krista's cortex delivers, but in

the bridged thalamuses everything is processed as one. Their thoughts

are equally well synchronized as how they master synchronizing their

four arms and legs.

As EMAH has shown, "consciousness", i.e.

awareness, is a two-dimensional 'now'* that resides in thalamus where it

functions as a sub-set of association patterns in the cortex, always

changing due to "outer" perceptions and "inner" feedbacks from how the

corresponding association networks in the cortex happen to fit the

situation. Association pattern in the thalamus ought to be seen as a

small local subset of the global network in the brain.

*

I.e. a continuous flow of changing "nows" without history or future.

Like a seamless/frameless video camera where the viewer, i.e. the brain, synchronizes/updates itself in a similarly seamless/frameless way.

There's no "immaterial intellect" or "material intellect" division. This thinking is a dinosaur from the past and reflects an unfounded Western belief in supernatural phenomena, of which the "monotheisms" are an example - to such an extent that even spelling correctors don't know the plural form of the word, although there are at least four main "monotheist" branches (Zoroastrianism, Judaism, Christianism and its late coming cousin islamism) plus a multitude of opposing variants.

The Even More Astonishing Hypothesis (EMAH) expands AI from human-centrism* - but not from existence-centrism*.

*

Human-centrism is the dividing of the world in "human" and "non-human".

An example is humans bragging about humans which makes no sense due to

the lack of any reference outside "humans". Which "non-human" would be

able to evaluate such a claim? We humans can only brag among ourselves,

which is equally meaningless as saying that this particular set is the

best of this particular set.

EMAH sees everything as the

latest adaptation in an arbitrarily chosen (local) global set which is

in equilibrium with an other (local) global set via an interface ('now')

working as a subset.

There's no time lag in adaptation because

it's synonymous with 'now'. In conventional language use one could say

that 'adaptation', 'now' and understanding are the same.

Words

like "mind", "memory", "history", "future", "abstract", "physical", and

"understanding" cannot be conventionally used in explaining EMAH.

"mind" implies something (Homunculus paradox) that talks with itself, which is impossible

"memory" implies a possibility to "go back" which is impossible

"understanding" implies a state of "not understanding" which is an oxymoron

"history" or "future" do not exist in EMAH because there can only be a 'now' which is the latest 'state'.

"abstract or physical" is a division that lacks meaning in EMAH

The

word 'artificial' in AI seems to imply made by humans but not human,

but instead does the very opposite, i.e. outlines separate rooms for

'human intelligence' and 'human made intelligence' where there cannot be

such a division. This division has a long history and contains concepts

such as e.g. soul, mind, etc.

Algorithm AI and non-algorithm AI

Algorithms

are useful but contain human bias. For a non-biased exploration of a

certain topic we therefore need an interface without algorithms.

General statements in conventional AI vs EMAH:

Cameras don't lie - pictures do.

'Intelligent

agents' are any device that perceives its environment and takes actions

that maximize its chance of success at some goal.

EMAH: There's

no room for "agency" in an EMAH interface. And "success" is an

algorithm, i.e. defined. EMAH lacks algorithms and is therefore free to

explore without bias - like a camera.

There are endless amounts

of possible EMAH interfaces - like e.g. a mounted video camera filming

waves. No matter if you watch the display in real time or later, the

only thing you get is the latest 'now' (frame). And the only way you can

"understand" every consecutive 'now' is as the latest changes piled on a

previously "known" state.

It's said that as machines become

increasingly capable, mental facilities once thought to require

intelligence are constantly removed from the definition.

EMAH:

'Intelligence' here seems to imply either there's some undefined point

where it becomes human, or there's no such point. And of course there's

no other point than the previously mentioned human selfishness.

A state that adapts to its environment

state- now

adapts- always the sum of inputs/always "up to date"

environment- inputs (change)

example- a light switch - or millions in a changing on/off state pattern
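The four lines above can be read as a tiny sketch in Python. This is only an illustration under stated assumptions, not an EMAH implementation: the function name `adapt`, the list of booleans standing in for a pattern of light switches, and the dictionary of inputs are all hypothetical names chosen here. The point it shows is that the state is always just the latest 'now': each input overwrites what was there, and nothing of the earlier pattern is kept.

```python
from typing import Dict, List


def adapt(state: List[bool], inputs: Dict[int, bool]) -> List[bool]:
    """Return the new 'now': the previous state overwritten by the inputs.

    `state` is a pattern of on/off switches (hypothetical representation).
    `inputs` maps a switch index to the position the environment sets it to.
    No history is stored; the earlier pattern is simply replaced.
    """
    new_state = list(state)
    for index, value in inputs.items():
        new_state[index] = value
    return new_state


# A pattern of four switches, all off.
state = [False, False, False, False]

state = adapt(state, {1: True})            # the environment flips switch 1
state = adapt(state, {1: True})            # same input again: nothing changes
state = adapt(state, {1: False, 3: True})  # two switches change at once

print(state)  # [False, False, False, True]
```

Scaling the list from four switches to millions changes nothing in the logic, only the size of the pattern.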

Some objections to prevailing understanding of "consciousness"

Do

keep in mind that the verbal is physiological and the

"non-physiological" only exists as a, in this respect, meaningless but

conflating verbal expression, just like e.g. 'ghost' and 'god'.

Consciousness is neural events occurring not throughout the brain, but in the thalamus.

There are no qualia.

Access

consciousness, as opposed to phenomenal consciousness, is said to be

the phenomenon whereby information in our minds is accessible for verbal

report, reasoning, and the control of behavior. So, according to this

view, when we perceive, information about what we perceive is access

conscious; when we introspect, information about our thoughts is access

conscious; when we remember, information about the past is access

conscious, and so on. EMAH disputes the validity of this distinction.

P-consciousness

is said to be simply raw experience: it is moving, colored forms,

sounds, sensations, emotions and feelings with our bodies and responses

at the centre. These experiences, considered independently of any impact

on behavior, are called qualia. EMAH objects to this view because

"qualia" is both an undefinable word as well as a linguistic

categorization with no place in the brain. Brains don't do "categories".

The very core of EMAH is to remove "folk language" concepts* from the analysis. A camera never lies but pictures do. The camera doesn't see qualia.

The complexity of the

neural network in the brain of a newborn is there to be synchronized

with the individual's coming experiences. So early on a lot happens

while later in life only minor changes occur.

David Chalmers has

argued that A-consciousness can in principle be understood in

mechanistic terms, but that understanding P-consciousness is much more

challenging: he calls this the hard problem of consciousness. However,

the stone example (1992) proves that 1) observation and understanding

are the same and that 2) there's no qualitative difference between

seeing, hearing, smelling etc. and that 3) what is called understanding

as opposed to observation is in fact just retrospection in the latest

state - as is any "new understanding", e.g. when in the stone example it

turns out to be made of papier-mâché.

Basics of "consciousness".

There's

no other difference between the "consciousness" of a stone in a stream

of water and the "consciousness" of a human being, except for the

stone's lack of origo (the stone is adapting mainly on its surface) and

lack of language. What often misleads us is our self inflicted admiring

of our own inability to grasp the complexity of the neural network in

our brain - but not the complexity of a stone and its interaction with

its environment. Nor do most people seem to realize that language is

capable of empty oxymorons used as facts of the brain. Or perhaps they

just love this feature of language as a magician loves his tools and

tricks. And as we all know, we pay for magicians to cheat us.

1 There are no "memories" or "history" - only the most recent state.

This state is constantly changing (evolving).

These changes are random inputs - because non-random inputs wouldn't change the state.

The real "hard problem" of "consciousness" ("consciousness" originally meant 'knowing with').

The

hard problem, i.e. phenomenal consciousness, may, according to

Chalmers, be distinguished from the "soft problem", i.e. access

consciousness. In EMAH, like in Dennett, there's no need for such a

divide.

2 The overall state (the cortex) is fixed until it gets changes from the thalamus.

Random inputs will be allocated into the existing state in accordance with its actual focus.

Focus

= the thalamic sub-state ("consciousness") that is dependent on the

actual association pattern in the cortex. Changes could come from cortex

in interaction with other association patterns or from outside the

brain, i.e. from the opposite direction in the thalamic display.

Actual focus = e.g. "awareness"/to be "conscious", which in whatever system simply means now.

System = whatever that changes.

The language problem (compare Donald Duck in the holy land of language in EMAH)

Wittgenstein called language a well functioning but hopelessly inaccurate game.

1 a neural network

2 random input to 1 causing a minor change in 1

3 1 will now be almost the same as previously except for a minor alteration caused by 2

4 next input will do the same unless it hits the previous one, in which case no reaction

5 the flow of random inputs continues
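The five steps above could be sketched roughly as follows. This is a minimal illustration under stated assumptions, not a model of a real neural network: the list of integers standing in for the network, the function names `apply_input` and `run`, and the reading of "hits the previous one" as "the input matches what is already at that position" are all assumptions made here.

```python
import random
from typing import List


def apply_input(network: List[int], position: int, value: int) -> bool:
    """Apply one input to the network; return True if the network changed."""
    if network[position] == value:
        # Step 4: the input hits what is already there, so no reaction.
        return False
    # Steps 2-3: a minor alteration; the rest of the network stays the same.
    network[position] = value
    return True


def run(network: List[int], steps: int, levels: int, seed: int) -> int:
    """Step 5: feed a flow of random inputs; return how many caused a change."""
    rng = random.Random(seed)
    changes = 0
    for _ in range(steps):
        position = rng.randrange(len(network))
        value = rng.randrange(levels)
        if apply_input(network, position, value):
            changes += 1
    return changes


# Step 1: a (very small) network, here ten positions that all start at 0.
network = [0] * 10
changes = run(network, steps=100, levels=4, seed=1)
print(network, changes)
```

Note that the function overwrites the network in place and keeps no log of earlier inputs, which matches the claim that only the most recent state exists.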

translated to EMAH and exemplified with how the brain works as a painter and a canvas

1 a canvas

2 experience painting on that canvas

3 a new canvas slightly different from the previous

4 if "painted" on a spot with the same "color" nothing changes

5

the "painter" never stops painting - but s/he is lazy so the canvas

changes very little over time - although the patterns on the canvas have

become all the time more "like" the "model"

Summary

We

(like everything else) don't "observe" or "understand" or "memorize" -

we adapt. And not only to our outer surrounding but equally to our own

body incl. our brain. Or a brick turning into gravel/sand. Or a star

turning into a supernova etc.

Is the pattern of the flying dust from what used to be a brick less or more "complex"? Or the supernova?

Although

the brain/nerve system could be seen as more complex, it's no different

from e.g. light skin that gets tanned in the sun.

EMAH is

extremely simple - yet not "simplistic". However, the culprit is what

humans are most proud about, i.e. language. Something one doesn't comprehend but wants to put in a package gets a name, and that name will continue to contain its blurred (or sometimes empty) "definition". This is why

EMAH only deals with 'now' and the body/state of the "past" (erased in

the process) this 'now' continuously lands on. Of course this leads to

everything (or nothing) having "consciousness".

A brick

"remembers" a stain of paint as long as it's there - and with some

"therapeutical" investigation in a laboratory perhaps even longer. And a

stain of paint on your skin is exactly the same. However, unlike the

brick you've also got a brain that was affected by the stain. This could

be compared with a hollow brick where the paint has vanished from the

outside but submerged into the brick's "brain" so that when cutting the

brick it "remembers" it and "tells" the cutting blade about it. And for

more complexity and "sophistication", just add millions of different

colors unevenly spread.

Although the brick example of course will

be challenged by mentalists - they in turn will be refuted by the

Homunculus paradox, Wittgenstein's private language problem, etc.

Background to Peter Klevius' 'stone example' against unfounded but populist "immaterial consciousness".

This

top science isn't offered to the mediocre Nature because that PC

magazine's quality isn't good enough and Peter Klevius doesn't have the

means to get a proper Chinese translation. So Google gets it in power of

its Western hegemony - not Google's quality which due to PC and

especially its connection with the militaristic leadership of the

$-freeloader U.S. constitutes a security risk beyond comprehension.

Here's

another example. In 1957 the Swede Arvid Carlsson was the first in the world to demonstrate that dopamine is a neurotransmitter in the brain and not just a precursor for norepinephrine. He also discovered that lack of dopamine causes Parkinson's disease. However, although Israel awarded him as early as 1979, and Japan in 1994, it was only in 2000 that he got the Nobel Prize, and he had

to share it with two others. Why? Because Swedish state supported

mentalists (what Peter Klevius calls the psycho state) have had a strong

strangle hold on research about the brain.

It's a linguistic

"abstract fantasy" trap to divide memory in abstract ("immaterial")

concepts and material sensations or images. Krista and Tatiana Hogan

constitute the perfect follow up to Peter Klevius' stone example from

1990-92, because when they 'talk inside their head with each other' that

can only happen in their connected thalamuses, not in their

disconnected cortices.

Mentalists' unproven and unreachable so-called

"objective reality" (or "fantasy reality") stands as the basis for their

unproven idea about non-physical mental processes in the brain at the

same time as they admit that sensory inputs are physical. This view

stands in sharp opposition to idealists, who only see what the

(physical) senses bring - but honestly admit that they have nothing to

say about a "world" outside the senses - except for Berkeley who called

the not reachable "god". But according to Peter Klevius'

existencecentrism, not even "god" fits in a set that can't be talked

about. Moreover, Peter Klevius is convinced that the intellectual

schizophrenia of mentalists is detrimental to Human Rights.

Peter

Klevius ontology and epistemology rests on Atheism, i.e. the lack of

monotheisms, combined with negative (basic) Human Rights, i.e. the lack

of impositions based on human characteristics, other than laws guided by

negative (basic) Human Rights. Peter Klevius is not a mentalist (see

below). Peter Klevius' analysis puts him, like Daniel Dennett, at odds

with mentalists.

Acknowledgement: The simple reason I often refer

to myself with my name in the text is because as a less known underdog

outside the conventional academic sphere (which is in fact my main

asset) there's a real chance that many will not only dismiss the author,

but more importantly, just cherry pick from my texts out of proper

context. Moreover, for me it's essential that I'm understood because

that's the only way for me and others to criticize myself. Furthermore, I

don't know about you dear reader, but although I'm fluent in three

languages, my thinking, like that of all animals, is mostly non-verbal,

meaning I have to translate it to words. This translation is for

mentalists the very obstacle to understand how the brain works.

Origins

Ultimately

the stone example and EMAH go back to Peter Klevius' correspondence

with G. H. von Wright (Wittgenstein's successor at Cambridge) in 1980 and a paid, published article from 1981 about evolution and scientific methodology. However, at the time I wrote the stone example I was

puzzled by how my theory could be physiologically explained. I didn't

know about the two-way cortico-thalamic connections until 1993 when they

were outlined in Nature. The manuscript to Peter Klevius' Demand for

Resources (with the 'stone example') was in its final form presented for

G. H. von Wright (Wittgenstein's successor at Cambridge) before Daniel

Dennett's Consciousness Explained was available. Moreover, whereas Peter

Klevius' analysis at the time lacked physiological evidence for

thalamus involvement, Dennett based his (non-mentalist) view on available data, which consisted mainly of the brain imaging of blood flow so popular in the 1980s, which gave the wrong impression that thinking

happened all over the brain, and which also encountered the speed limit

problem that was neglected by "close to the same time". Peter Klevius

analysis eloquently resolved this problem by keeping attention/awareness

in the smaller thalamus "display" while the cortex stands for the

totality of adaptations of which only a tiny part is projected on the

thalamus. So what the blood flow images show is just the history of what

the thalamus has been busy with, i.e. the association patterns thalamus

activates on the cortex.

In fact, Peter Klevius didn't even know

the existence of Dennett until many years after Peter Klevius' letter

to Francis Crick. Why Dennett is mentioned here is because he seems to

be a non-mentalist and closest to Peter Klevius analysis. However,

unlike Peter Klevius' 'stone example' where consciousness is limited to a

real time 'now' "image" of the world (i.e. no depth), Dennett compares

consciousness to an academic paper that is being developed or edited in

the hands of multiple people close to the same time, the "multiple

drafts" theory of consciousness. In this analogy, "the paper" exists

even though there is no single, unified paper. When people report on

their inner experiences, Dennett considers their reports to be more like

theorizing than like describing. These reports may be informative, he

says, but a psychologist is not to take them at face value. Dennett

describes several phenomena that show that perception is more limited

and less reliable than we perceive it to be. Dennett's views put him (as

Klevius) at odds with thinkers who say that consciousness can be

described only with reference to subjective "qualia". These "qualia"

people's (ab)use of language is the main obstacle for understanding how

the brain works and therefore also the main target for Peter Klevius

analysis, which could otherwise have been much shorter. One year after

publishing Demand for Resources, Peter Klevius read in Nature about

two-way cortico-thalamic connections which immediately for him located

the stone example to the thalamus, hence overcoming earlier problems

about neural speed limits in the brain.

Short form of Peter Klevius ontology (1981, 2003): Peter Klevius would be helpless without an assisting world*.

*

Peter Klevius has no 'self' or 'private language' because all of him is

a product of his environment (incl. his body). Moreover, the world that

has shaped him is exactly his whole world. There can't be a world

"beyond" existencecentrism (see below). Same applies to the whole of

humankind. This world is constantly changing but can never exceed the

borders of existencecentrism.

And here's a longer form for those

who desperately try to misinterpret it for the sake of rescuing their

beliefs. As in the preface to my 1992 book Demand for Resources, I again

appeal for a positive reading - so to save the reader from her/his own

prejudice:

Being is ultimately only comprehensible as an

all-inclusive whole which Peter Klevius calls 'existencecentrism', i.e.

that the view from one's (or humankind's) particular origo is always

limited (otherwise we would be all seeing gods) which also excludes

"metaphysics" or if you like, integrates "metaphysics" into our

existencecentrism, i.e. into what can be said/experienced. There cannot

exist anything outside our reality because "existence" is dependent on

human minds. Trying to talk "outside" one's existencecentrism is

therefore impossible and only ends up in a navel gazing dead end of

undefinable "nothingness".

Language has overwhelmed our thinking

to an extent that often hinders or complicates the analysis of it. The

'stone example' below is meant to reveal the true nature of language as

just an adaptation among others, so to discharge it from conflating

misleading words about how organs (e.g. the brain) work. We have a

tendency to create meaningless questions because language - but not the

world - allows it. Words like 'memory', 'past', 'future' etc., have no

meaning when exploring awareness/consciousness because there's only one

valid latest 'now' at the time, just like a video where only the last

frame is relevant for viewing. If the stone in the stone example later

turns out not to be a stone, then we can no longer "remember" the

"stone" we saw before we realized it wasn't a stone.

There's no

"reality" or "things-in-themselves" outside our existencecentrism,

simply because whatever we talk about is per definition already inside.

So trying to explain something humans come up with and to demand a "god"

to answer a question that makes no sense - makes no sense. This also

means that there's no basis for questions like 'don't you believe in a

human independent reality'. A human independent "reality" is per

definition out of reach, so the question becomes an oxymoron. A human

perceived object or world can't exist if humans are forever gone. Our

world is in our mind only - where else could it possibly reside.

However, many seem to have a problem letting the question go, e.g. by

stubbornly repeating the naive 'but surely the table must still be there

even if all humans are gone'. And if we pretend being an all seeing

god, then we would realize that the bird on what humans used to call a

'table' strongly disagrees while conceptualizing it perhaps as a place

for landing.

There are no colors, objects etc. in the brain, only

the imprint on the neuronal network of our adaptations with our world

incl. each other. We adapt to our surrounding just like a rock in a

continuous stream of water, or a flatworm to light. The light absorbed

by silver crystals on photographic film produces a reflection that can

only be "understood" as an image based on earlier adaptations to what is

interpreted to be in the image. An image of the stone in the 'stone

example' may be interpreted as a stone or papier-mâché, depending on the

knowledge of the viewer. To be able to know the world at all, there must

be a continuing identity of mind and perception. This equilibrium is

upheld by synchronizing new perceptions with the previous state of the

mind.

Mind or consciousness are physical and physiological.

Everything else is just language. It's language that makes consciousness

"mysterious". The reason many humans don't accept consciousness in e.g.

flatworms is that humans tend to drown in their oceans of neurons etc.

A

mind independent world is impossible because how could we possibly talk

about something "outside" our mind. If you, like naive "realists", say

that objects still exist even if there's not a single human left to

sense them, then ask yourself how to sense such objects without any

human existing to perform the sensing? Moreover, if an unknown force

suddenly puts the universe into a state of timeless and spaceless singularity,

then where are your objects? This latter example is of course equally

naive as the naive "realist" position, and therefore belongs to them.

Peter Klevius commenting on the misuse of widely used concepts:

* Such concepts may of course be perfectly usable in openly declared local contexts.

'A car' is equally concrete or abstract as 'the car'.

Although

earlier cosmological models of "the" universe now are accused of being

geocentric, i.e. placing Earth at the center, nothing has really changed

because "the" universe is anyway still both anthropocentric as well as

limited by our existencecentrism. Yesterday's Earth is today's "Big

Bang" (P. Klevius 1992:22).

The 'empty set' is the most

operational of all sets in that its impossible task is to keep things

from entering it, e.g. its own conceptual defining framework.

Objects, operations, and functions

Peter Klevius: Objects, operations, and functions, are all dependent on each other.

Organs of sense-

Peter Klevius: There can't be "organs of sense", because then there could also be "organs of appearances" and similar stupidities.

produce sensations out of which appearances take place-

Peter

Klevius: There's no difference between sensations and appearances.

Where would you draw such a line? "Sensations and appearances" meet in

the thalamus where they become one, i.e. 'now'.

and these come to represent something that renders objects thinkable.

Peter

Klevius: Represent what? Where was the original presentation? The "real

world" that's beyond us?! But our existencecentrism excludes us from

even talking about it - and if we do we are back to appearances.

Although

one could say that the heart is the origo of the blood flow, unlike the

nervous system that feeds the brain, the heart is part of an

inclusive system. And the stone in the flow of water doesn't have an

origo, other than its centre of gravity.

Why are we here? This

question is senseless because it rests on the possibility of a

"nothingness" which would be impossible to define because its definition

would kill the concept as well as the question. So when Penrose says

the universe at some extremely diluted point may "forget" space and time,

then this scenario is still within our existencecentrism. Wittgenstein's

'bedrock' is Peter Klevius' existencecentrism.

Just like a stone

in the continuous flow of water adapts to its environment, similarly

the mind doesn't need to "structure" and "process" incoming data,

because it simply maps it on the existing data map. Better still, there

are no "incoming" data, only nerve reactions. And just like we make

sense of an image, similarly we make sense of other reactions.

There

is no one thing that unifies being human - except negative (basic)

Human Rights, which don't limit your sphere of love or passion, but

lets others do the same without impositions, except for what is

restricted by laws guided by these same rights.

Dear reader, don't confuse this text with nihilism because it's actually less nihilistic than mainstream views on the subject.

The

significance of Peter Klevius' stone* example from 1992, is to embed

contentious or confusing concepts into a theoretical analysis that makes

their connections to other categories more explicit. As a consequence

it will also reveal the impossibility of any effort to draw a

distinction between abstract and concrete objects because there simply

can't exist a human definition in a "reality" outside human experience.

The 'car' is equally abstract as the 'thought' about it. And the

neuronal activity we call a thought process is certainly equally

physical and physiological as photons hitting the retina or the

molecules hitting our mouth and nose, or the vibrations hitting our

ears. The fancy "elevation" of some physical/physiological events to a

"higher" status has no real foundation.

*

The reason Peter Klevius chose 'stone' instead of 'rock' is that 1) in

Swedish it's 'sten', and 2) in both Swedish and its creole descendant

English, the word sten/stone is also associated with phrases like stone

blind (literally "blind as a stone"), stone deaf, stone-cold, etc.,

which then contrasts more sharply with the 'mind'. Yet, nothing excludes

the possibility of describing a stone as equally complex as the brain.

There

are no functions without objects. A function is an operation which

needs objects to function, such as variables or other operational

"tools". You can't think about a number without its operational

function, be it functioning as a sign or a calculation. There simply

doesn't exist a naked number. Same with colors, which will always be

somehow framed.

Everything experienced is always understood,

which means that every conceptualization happens in the brain - not in

an outside "reality". The retroactive "understanding" that the stone

later turned out to be something else, is just a new understanding.

The

oxymoron 'true by definition' is limited to its definition. The

"out-of-Africa" myth, for example, rests on defining modern DNA as

representing the same locality (Africa) several hundred thousand

years ago. And fossils are pure lottery if they can't be satisfactorily

tied to evolutionary origin. This is why Homo floresiensis, on the

"wrong" side of the Wallace Line, outperforms all fossils in Africa.

The stone example reveals that:

1 Recognition of a stone as matching the concept of a 'stone' is

culturally embedded in our brain as a result of adaptation (programmed

through lived experience). There is no direct understanding of a "real"

stone, only the cumulative adaptations of when to use the concept, or

how to deal with it in general - just like animals do without linguistic

concepts.

2 'Stone' is a linguistic reflection and doesn't cover

humans who are non-linguistic*. Language is an anthropocentric

operation, and therefore not applicable to non-linguistic lives or

things. This means a linguistic machine could understand a linguistic

human linguistically, whereas a non-linguistic human would not

understand a linguistic machine.

3 Seeing and touching a stone both need

the recognition that it is a stone. Photons from the stone or from the

ink in the word 'stone' do exactly the same as touching the stone - which

includes hearing the word stone. And if the surface feels hard it could

still be a hollow shell. And if it feels heavy like a stone it could

still be a dirty piece of hollow gold weighing the same as an ordinary

'stone'.

* The most naive, or alternatively, the most

self-evident of value based expressions is that 'humans are special' -

but not more or less special than a billion year old stone or a flying

fruit fly. And the only way to encompass all humans as fully human is to

Atheistically and axiomatically accept it as e.g. it's stated in the

original anti-fascist, anti-racist, anti-sexist U.N.'s Universal

Declaration of Human Rights from 1948 - which islam's biggest and most

influential organization, the Saudi based and steered O.I.C., in 1990

declared not acceptable and therefore replaced with an islamic sharia

declaration, which contrary to Art. 2 in the UDHR, imposes segregated

"rights".

First of all one needs to accept that we are by

necessity anthropocentric (and above all existencecentric). How could we

possibly not be humans? You may also benefit from learning about the later

Ludwig Wittgenstein (who asked my mentor* G. H. von Wright to be his

successor at Cambridge) whose reasoning is in good harmony with Peter

Klevius' EMAH theory which in turn pushes the "consciousness"/language

"problem" to its ultimate end - without embarking on simplisticism.

Unfortunately there seems to be a problematic aversion to

Wittgenstein's most important insights among many Western scholars,

probably due to the fact that Wittgenstein in his later period didn't

follow a more conventional philosophical jargon and methodology within

the discipline, but rather questioned its borders. Aversion to

Wittgenstein may also have something to do with the heavy influence of

"monotheisms"** which became widespread in the West because of the Roman

empire. However, it also feeds into a quite appalling and racist

dismissal of non-monotheistic thought traditions. And Atheism, which is

the only possible foundation for fully adopting basic (negative) Human

Rights, is in e.g. U.S. politics etc. still almost seen as a curse. This

Western bigoted hypocrisy is easily seen in statements about

"monotheistic" religions as somehow the 'crown of sophistication' -

although stunningly disproved by history. Moreover, Kierkegaard was an

individualist, not a "communityist".

*

G. H. von Wright strongly supported P. Klevius' 1979 paper Resursbegär

(Demand for Resources) that was published in 1981 as a paid article. The same

thing happened a decade later with Peter Klevius' book of the same name,

published in 1992 - although he thought its 'aphoristic form' could be

difficult for some readers.

** Atheist Wittgenstein's curiosity

about religion has often been wilfully misinterpreted. Wittgenstein was

also interested in other similar human entanglements such as e.g.

psychoanalysis. A telling sign is that the father of psychoanalysis,

Sigmund Freud, didn't make his list of people who had influenced him

the most, whereas that list included Otto Weininger, the youngster whom Freud had

dismissed, probably becoming complicit in what led to the vulnerable

young and depressed genius' suicide. And because Weininger's Sex and

Character was seen as misogynistic, Wittgenstein was asked how he could

like such a work. To which Wittgenstein answered that one may negate

everything in it and it's still good. Peter Klevius' thesis Pathological

Symbiosis raises the question of how many young lives have been distorted

or destroyed because of psychoanalytically influenced actions. Adult

people can choose if they want to consult these modern magicians, but

have no such right when authorities decide about their children.

Our

mind consists of adaptive associations/reactions in every 'now' built

on previous ones. However, using the associations/reactions (or simply

adaptations) we call language to "explain" associations/reactions to

language, of course causes confusion. Mind is a word that can be used as

a synonym for human, and hence solely restricted to humans while

therefore also eliminating the possibility of the question: Do others

than humans have minds? Alternatively one may expand its use over the

human border and face no defensible restrictions at all. However, since

humans are trapped in our own existencecentrism we lack authority to

talk for others. What we can do though is to clean up our

anthropocentric discourse. To avoid the "consciousness mystery" one has

to clearly distinguish between single human-only bordered experience and

one that includes the totality of human existencecentrism* (see P.

Klevius 1992:21-22). This is why the question: 'Do animals have

consciousness?' is a meaningless oxymoron. Starting by declaring only

humans have "consciousness" while then blurring this concept with other

human centered concepts such as "soul", "spirit", "self" etc.,

inevitably leads to questions about animals and a consequent conflation of the

original concept. This is no different from the slow acceptance of

evolution where still today many stubbornly keep hanging on to the 'humans

are special' myth. Humans can only be special among humans. How would a

non-human possibly even know what is meant by 'humans'?

The

'Universe' is fully comprehensible for humans because the whole of it is

bordered by human existencecentrism. Humans hence rule the world by

absolute dictatorship.

The fancy idea that 'there's a physical

reality' independent of humans, I abandoned in my early teens after

reading Einstein's and Barnett's book about the Universe. The concept of

'physical reality' (which implies some other perceivable "reality") is

inevitably and only contained in human language - so without humans no

"physical reality". "Reality" has no mysterious "essence" other than

what humans inject "it" with. A 'stone', a 'brick', a 'table' etc. have

no "essence" but are, like e.g. numbers, only operational, i.e. context

bound. And the only essence humans have in common is the axiomatic

"being human". Sure we can talk about it, touch, make experiments and

even agree that the Earth is still there even after Uncle Sam has

started a nuke war that eventually could accelerate and make humans

extinct. However, where would the human perceptions be stored? And even

if the Golden Records on Voyager somehow came in contact with what we used to call

"Aliens" - the cultural content is as cut off from them as the artefacts of prehistoric

'humans' (i.e. the genus) are from us living humans.

In

Demand for Resources (1992) Peter Klevius pointed out the difference

between the modern use of the word existence as implying the possibility

of non-existence, and the more sensible and culturally much older and

more widespread meaning of something emerging (compare 'existere'), i.e.

not out of "nothing" or "god".

Reality is always confined within

the borders of existencecentrism. "Metaphysics" hence is (or should be)

simply the acceptance of existencecentrism. So whatever "universe",

"reality" or "spirit" is contemplated, it always resides within the

borders of existencecentrism. While existence is motion/change, the

borders of existencecentrism constitute an unchangeable relativity. No

matter what new insights are made they cannot change this because there

is no "reality" beyond existencecentrism that could be used as a

reference. The size of the "still unknown" is always infinite. On the

level of humankind this means that it cannot be assessed, compared,

evaluated etc. against other "kinds" other than by using a meaningless

"humankind" comparison.

The mentalists' love for a "mental", as opposed to physical, hiding place.

As

Peter Klevius wrote in 1981, 'the meaning of life is uncertainty' - which

offers more possibilities than any narrow minded mentalist view. This

uncertainty is rich enough in itself and, contrary to what mentalists

believe, mentalism actually not only limits freedom but also boosts

racism and sexism as defined in the 1948 Universal Declaration of Human

Rights.

And according to the stone example in EMAH there is, in this context,

no meaningful separation between observation and

understanding. The relation between a new observation that contradicts

an earlier one is not consciousness but can of course be titled

'understanding'. And the totality of our understanding is just the

temporal body of adaptations bordered against the future by a now. In

other words, the future doesn't exist per se.

One way of helping to

understand EMAH is to think about an internally active two-way

display/monitor (thalamus in vertebrates) with ever changing "meetputs"

('nows' - i.e. stream of "images") between input and output, incl.

inputs and outputs from your brain and other parts of your body.

"Sensory information" has conventionally been seen as a specific type of

stimulus. This view is a linguistic mirage which arbitrarily

categorizes certain inputs. Although it's useful to talk about hearing,

vision etc., there's no need to make a "sensory group" which only

creates unnecessary bias when analyzing "consciousness".

Peter Klevius' stone example unifies all modes of observation and communication.

If we want to break the borders of human navel-gazing we also need to clean up cross-border concepts.

In

the 1980s, while reading Jürgen Habermas' The Theory of Communicative

Action, Peter Klevius criticized his division between observation and

understanding, as I had always done in other contexts. However, my

(perhaps excessive) respect for Habermas made me wonder why even he used

such a meaningless distinction.

Peter Klevius' 'stone example' in Resursbegär (Demand for Resources) from 1992 (pp 32-33, ISBN 9173288411).

The

connection between intelligence/intellect and its biological anchors

may appear problematic on several levels. This applies to the connection